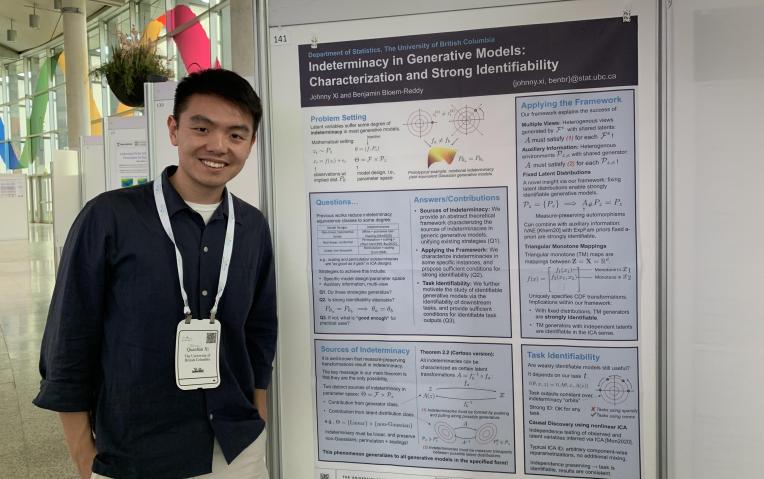

Quanhan Xi

Doctor of Philosophy in Statistics (PhD)

Research Topic

Representing latent interventions in generative models

Theses completed in 2010 or later are listed below. Please note that there is a 6-12 month delay to add the latest theses.

In applied ecology, especially in fields related to forest ecology, there is a need to make long-term model projections under predicted future conditions. Current methods for model selection do not emphasize reducing risk when extrapolating, and are often inappropriate when the distribution of predictor variables changes between the training and testing (predictive) settings. A method proposed for domain generalization is extended here to linear mixed-effects models, a class of models commonly used in ecology. The invariant models method selects models whose residuals are independent of the task, assuming that this conditional independence also holds for the test task. The performance of the proposed method is evaluated using simulations and an example forest ecology dataset.
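
The invariance idea can be sketched in a few lines. This is a minimal, hypothetical illustration in the spirit of invariant causal prediction, not the thesis's method. It uses pooled ordinary least squares rather than linear mixed-effects models. It treats a predictor subset as invariant when a one-way ANOVA (residual means) and Levene's test (residual variances) find no difference across tasks. The helper names, the significance level `alpha`, and the simulated data are all assumptions.

```python
from itertools import combinations

import numpy as np
from scipy import stats


def residual_invariance_pvalue(X, y, task, subset):
    """Hypothetical helper: p-value for H0 that residuals of a pooled OLS fit
    on `subset` have the same mean and variance in every task."""
    n = len(y)
    design = np.column_stack([np.ones(n)] + [X[:, j] for j in subset])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    groups = [resid[task == t] for t in np.unique(task)]
    p_mean = stats.f_oneway(*groups).pvalue   # equal residual means?
    p_var = stats.levene(*groups).pvalue      # equal residual variances?
    return min(1.0, 2 * min(p_mean, p_var))   # Bonferroni over the two tests


def invariant_subsets(X, y, task, alpha=0.001):
    """Return every predictor subset whose residuals look task-invariant."""
    pvals = {}
    for size in range(X.shape[1] + 1):
        for subset in combinations(range(X.shape[1]), size):
            pvals[subset] = residual_invariance_pvalue(X, y, task, subset)
    accepted = [s for s, pv in pvals.items() if pv > alpha]
    return accepted, pvals


# Simulated tasks (assumed): x1 causes y, x2 is a child of y; both shift by task.
rng = np.random.default_rng(0)
shift_x1, shift_x2 = [0.0, 1.0, 2.0], [0.0, 1.0, -1.0]
Xs, ys, tasks = [], [], []
for t in range(3):
    x1 = rng.normal(shift_x1[t], 1.0, size=500)
    y_t = 2.0 * x1 + rng.normal(0.0, 1.0, size=500)
    x2 = y_t + shift_x2[t] + rng.normal(0.0, 0.5, size=500)
    Xs.append(np.column_stack([x1, x2]))
    ys.append(y_t)
    tasks.append(np.full(500, t))
X, y, task = np.vstack(Xs), np.concatenate(ys), np.concatenate(tasks)

accepted, pvals = invariant_subsets(X, y, task)
print(accepted)
```

In this setup, the empty subset, {x2}, and {x1, x2} all leave residual means that shift between tasks, so they should be rejected. The subset {x1} is the one the invariance principle is designed to keep.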

Latent variable models posit that an unobserved, or latent, set of variables describes the statistical properties of the observed data. The inferential goal is to recover the unobserved values, which can then be used for a variety of downstream tasks. Recently, generative models, which attempt to learn a deterministic mapping (the generator) from the latent to the observed variables, have become popular for a variety of applications. However, arbitrarily different latent values may give rise to the same dataset, especially in modern non-linear models; this issue is known as latent variable indeterminacy. In the presence of indeterminacy, many scientific problems that generative models aim to solve become ill-defined. In this thesis, we develop a mathematical framework to analyze the indeterminacies of a wide range of generative models by framing them as a special type of statistical identifiability. By doing so, we unify existing model-specific derivations from various corners of the diverse literature on identifiability in latent variable models. Using our framework, we also derive conditions that eliminate indeterminacies completely while maintaining the flexibility of modern methods. These conditions allow us to target the sources of indeterminacy precisely and to derive novel results on the weak and strong identifiability of popular generative models and their variations.
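
A standard textbook case of latent variable indeterminacy is the linear Gaussian factor model x = Wz + ε, with z ~ N(0, I) and ε ~ N(0, σ²I). This illustration is ours, not a result from the thesis. For any orthogonal matrix R, the generator WR with latents R^T z produces exactly the same observations, so the data cannot distinguish z from R^T z. The dimensions, noise level, and rotation angle below are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
d, k, sigma2 = 5, 2, 0.1
W = rng.normal(size=(d, k))                        # original generator (loadings)
theta = 0.7
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])    # an orthogonal 2 x 2 matrix
W_alt = W @ R                                      # alternative generator

# Marginal covariance of x under each model: W W^T + sigma^2 I.
cov = W @ W.T + sigma2 * np.eye(d)
cov_alt = W_alt @ W_alt.T + sigma2 * np.eye(d)

# The same noise-free observation is explained by two different latent vectors.
z = rng.normal(size=k)
z_alt = R.T @ z
x_mean = W @ z
x_mean_alt = W_alt @ z_alt

print(np.allclose(cov, cov_alt), np.allclose(x_mean, x_mean_alt), np.allclose(z, z_alt))
# prints: True True False
```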

Boosting variational inference (BVI) approximates Bayesian posterior distributions by iteratively building a mixture of components. However, BVI requires greedily optimizing the next component, an optimization problem that becomes increasingly computationally expensive as more components are added to the mixture. Furthermore, previous work has only used simple (i.e., Gaussian) component distributions; in practice, many of these components are needed to obtain a reasonable approximation. These shortcomings can be addressed by considering components that adapt to the target density. However, natural choices such as MCMC chains do not have tractable densities and thus require a density-free divergence for training. As a first contribution, we show that the kernelized Stein discrepancy, which to the best of our knowledge is the only density-free divergence feasible for VI, cannot detect when an approximation is missing modes of the target density. Hence, it is not suitable for boosting components with intractable densities. As a second contribution, we develop locally adaptive boosting variational inference (LBVI), in which each component distribution is a Sequential Monte Carlo (SMC) sampler, i.e., a tempered version of the posterior initialized at a given simple reference distribution. Instead of greedily optimizing the next component, we greedily choose to add components to the mixture and perturb their adaptivity, thereby causing them to converge locally to the target density; this yields refined approximations with considerably fewer components. Moreover, because SMC components have tractable density estimates, LBVI can be used with common divergences (such as the Kullback–Leibler divergence) for model learning. Experiments show that, compared to previous BVI methods, LBVI produces reliable inference with fewer components and in less computation time.
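
The mode-blindness of the kernelized Stein discrepancy (KSD) shows up in a one-dimensional toy example. This sketch is our own illustration, not the thesis's experiment. It assumes a target that is an equal mixture of N(-10, 1) and N(10, 1), an RBF kernel with bandwidth 1, and the standard U-statistic estimate of KSD². The KSD depends on the target only through its score d/dx log p at the sample points. A sample covering just one well-separated mode therefore sees essentially the score of that single Gaussian, and its KSD² is near zero, just as it is for a sample from the full mixture. A sample shifted by one unit, by contrast, gets a clearly positive value.

```python
import numpy as np


def mixture_score(x, means, weights):
    """d/dx log p(x) for a 1-D mixture of unit-variance Gaussians."""
    x = np.asarray(x, dtype=float)[:, None]
    logits = np.log(weights) - 0.5 * (x - means) ** 2
    resp = np.exp(logits - logits.max(axis=1, keepdims=True))
    resp /= resp.sum(axis=1, keepdims=True)         # component responsibilities
    return -(resp * (x - means)).sum(axis=1)


def ksd_squared(samples, score, h=1.0):
    """U-statistic estimate of KSD^2 with the RBF kernel exp(-(x-y)^2 / (2 h^2))."""
    s = score(samples)
    diff = samples[:, None] - samples[None, :]      # diff[i, j] = x_i - x_j
    k = np.exp(-diff**2 / (2 * h**2))
    # Stein kernel for the RBF kernel:
    # u(x, y) = k * [s(x)s(y) + (s(x) - s(y))(x - y)/h^2 + 1/h^2 - (x - y)^2/h^4]
    u = k * (s[:, None] * s[None, :]
             + (s[:, None] - s[None, :]) * diff / h**2
             + 1 / h**2 - diff**2 / h**4)
    n = len(samples)
    return (u.sum() - np.trace(u)) / (n * (n - 1))  # average over pairs i != j


means, weights = np.array([-10.0, 10.0]), np.array([0.5, 0.5])


def target_score(x):
    return mixture_score(x, means, weights)


rng = np.random.default_rng(2)
n = 1000
one_mode = rng.normal(-10.0, 1.0, size=n)           # misses the mode at +10
both_modes = np.concatenate([rng.normal(-10.0, 1.0, size=n // 2),
                             rng.normal(10.0, 1.0, size=n // 2)])
shifted = rng.normal(-9.0, 1.0, size=n)             # right shape, wrong location

for name, sample in [("one mode", one_mode), ("both modes", both_modes), ("shifted", shifted)]:
    print(name, round(float(ksd_squared(sample, target_score)), 3))
```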

The primary goal of factor analysis is to uncover a set of latent factors that can explain the variation in the data. Principal component analysis is one approach; it estimates the factors as a set of orthogonal vectors. However, the factors may be difficult to interpret as-is, so it is common to rotate the estimated factors to make their coefficients as sparse as possible and thereby improve interpretability. Varimax is the most popular factor rotation method, and its statistical properties have been studied in recent literature. Entromin is another factor rotation method that is less commonly used and less well studied, but conventional wisdom holds that Entromin generally finds sparser rotations than Varimax. In this thesis, we aim to explain this sparsity claim by studying the statistical properties of Entromin. Our main contributions are several theoretical results that take steps towards this aim. We show that Varimax is a first-order approximation of Entromin, and that generalizing this connection leads to a family of Entromin approximations. We derive the conditions under which the second-order approximation can be viewed as performing statistical inference in a latent factor model. We then connect optimizing the Entromin criterion to recovering sparsity in the factors. Other contributions include connections to statistical concepts that, to our knowledge, have not previously been made in the literature, and an empirical study of Entromin on a dataset of New York Times articles.
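
For two factors, the two criteria can be compared concretely with a brute-force scan over rotation angles. This is a toy sketch under our own assumptions, not the thesis's analysis. The loading matrix is hypothetical and varimax is the raw (unnormalized) criterion. The Entromin-style objective is written as the entropy −Σ λ² log λ² of the squared loadings, and scaling conventions for it vary. The loadings start with perfect simple structure hidden by a 30-degree rotation, so both criteria should pick the same angle: the one that restores the sparse pattern, up to sign and column order.

```python
import numpy as np


def rotation(theta):
    """2 x 2 rotation matrix for an angle in radians."""
    return np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])


def varimax_criterion(L):
    """Raw varimax: sum over factors of the variance of the squared loadings."""
    sq = L**2
    return float(np.sum(np.mean(sq**2, axis=0) - np.mean(sq, axis=0) ** 2))


def entropy_criterion(L):
    """Entropy of the squared loadings, -sum(l^2 log l^2), treating 0 log 0 as 0."""
    sq = L[np.abs(L) > 1e-12] ** 2
    return float(-np.sum(sq * np.log(sq)))


# Hypothetical loadings with perfect simple structure, hidden by a 30-degree rotation.
L0 = np.array([[0.8, 0.0], [0.7, 0.0], [0.9, 0.0],
               [0.0, 0.8], [0.0, 0.6], [0.0, 0.7]])
L_dense = L0 @ rotation(np.deg2rad(30.0))

# With two factors, angles in [0, 90) cover all orthogonal rotations up to
# sign flips and column swaps, which neither criterion can see.
degrees = np.arange(0.0, 90.0, 0.5)
vmx = [varimax_criterion(L_dense @ rotation(np.deg2rad(d))) for d in degrees]
ent = [entropy_criterion(L_dense @ rotation(np.deg2rad(d))) for d in degrees]
best_vmx = degrees[int(np.argmax(vmx))]
best_ent = degrees[int(np.argmin(ent))]
print(best_vmx, best_ent)  # prints: 60.0 60.0
```

An extra 60 degrees makes the total rotation 90 degrees, which returns the sparse loadings with the columns swapped and one sign flipped. Real loadings are never exactly sparse, so in practice the two optima need not coincide.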

A useful step in data analysis is clustering, in which observations are grouped together in a hopefully meaningful way. The mainstay model for Bayesian nonparametric clustering is the Dirichlet process mixture model, whose key advantage is that it infers the number of clusters automatically. However, the Dirichlet process mixture model has particular characteristics, such as linear growth in the size of clusters and exchangeability, that may not be suitable modelling choices for some data sets. There is therefore further research to be done into other Bayesian nonparametric models whose characteristics differ from those of the Dirichlet process mixture model while still inferring the number of clusters automatically.

In this thesis, we introduce the Neutral-to-the-Left mixture model, a family of Bayesian nonparametric infinite mixture models that strictly generalizes the Dirichlet process mixture model. This family has two key parameters, the distribution of arrival times of new clusters and the parameters of the stick-breaking distribution, whose customization allows the user to inject prior beliefs about the structure of the clusters into the model. We describe collapsed Gibbs and Metropolis–Hastings samplers to infer the posterior distribution of clusterings given data. We consider one particular parameterization of the Neutral-to-the-Left mixture model whose characteristics are distinct from those of the Dirichlet process mixture model, evaluate its performance on simulated data, and compare the results with those from a Dirichlet process mixture model. Finally, we explore the utility of the Neutral-to-the-Left mixture model on real data by applying it to cluster tweets.
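
Simulating a clustering from the prior illustrates the roles of the two parameter families. The sketch below is a hypothetical, simplified generative process in the Neutral-to-the-Left style, and it may differ from the thesis's exact parameterization:

- New clusters arrive after Geometric(`p_new`) gaps.
- Each cluster after the first gets a Beta(`a`, `b`) stick-breaking variable ψ_j, and the first gets ψ = 1.
- An observation that does not open a new cluster joins cluster j with probability ψ_j times the product of (1 − ψ_l) over all later clusters l > j, so the stick is broken starting from the most recent cluster.

The sketch samples only the partition, not the mixture likelihood, and the function name and defaults are assumptions.

```python
import numpy as np


def sample_ntl_partition(n, p_new=0.2, a=1.0, b=1.0, seed=0):
    """Hypothetical NTL-style prior draw: returns cluster labels and arrival times."""
    rng = np.random.default_rng(seed)
    # Arrival times: observation 0 opens the first cluster; gaps ~ Geometric(p_new).
    arrivals = [0]
    while True:
        t = arrivals[-1] + int(rng.geometric(p_new))
        if t >= n:
            break
        arrivals.append(t)
    arrival_set = set(arrivals)

    labels = np.empty(n, dtype=int)
    psi = []                                   # one stick-breaking variable per cluster
    for i in range(n):
        if i in arrival_set:                   # open a new cluster
            psi.append(1.0 if not psi else rng.beta(a, b))
            labels[i] = len(psi) - 1
            continue
        # Break the stick from the newest cluster back to the first (psi[0] = 1).
        weights = np.empty(len(psi))
        remaining = 1.0
        for j in range(len(psi) - 1, -1, -1):
            weights[j] = remaining * psi[j]
            remaining *= 1.0 - psi[j]
        labels[i] = rng.choice(len(psi), p=weights)
    return labels, arrivals


labels, arrivals = sample_ntl_partition(200)
print(len(arrivals), np.bincount(labels))
```

In this sketch, `p_new` controls how often new clusters appear. Larger values of a/(a + b) push more of the mass of later observations towards recently created clusters.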
