Though 'Deep Learning' - a subset of machine learning - has achieved near-human accuracy in Natural Language Processing, an overwhelming portion of the world's languages lack the online resources and data to take advantage of that technology. Ife combines linguistics and computer science to fill this gap for six major African languages by developing resources and technologies for these languages and making all of this data publicly accessible at all levels.

Research Description

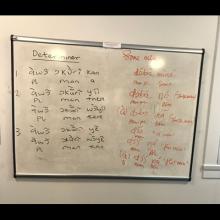

Deep learning, one of the most successful methods for Natural Language Processing (NLP), has achieved near-human accuracy on many NLP tasks for which large amounts of (labeled) data are available. Unfortunately, the majority of the world’s 7,000 languages, including 2,000 languages in Africa, are low-resource languages (LRLs) that do not have adequate resources. By implication, technologies developed using this NLP technique exclude many African languages.

This research, which forms the main part of my dissertation, “Inclusive by Design: Natural Language Technology for Africa”, will focus on (1) developing technologies that produce sufficiently accurate results for LRLs, with emphasis on six African languages, including Akan, Hausa, Swahili, Yoruba, and Zulu; and (2) engaging in ongoing public discourse on language diversity and inclusion in technology. I propose to answer three scientific questions. R1: To what extent can mainstream deep learning methods developed for resource-rich languages be adapted to LRLs? R2: In the absence of human-labeled data, what is the utility of applying linguistic knowledge to solve specific NLP problems on LRLs? R3: How can language policy be applied to aid language diversity in technology?

Although this work will be published in academic venues, the tools and data created will be accessible to Africans and the wider public to aid diversity in technology. R1 and R2 will occur in four phases: (1) corpus creation by crawling the public web and public social media platforms and by documenting local media; (2) data cleaning using automatic and manual methods; (3) exploring language-specific linguistic rules to bootstrap data annotation; and (4) proposing, justifying, and implementing deep learning approaches to develop tokenizers, part-of-speech taggers, language models, machine translation, and named entity recognition models.
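
As a rough illustration of what the automatic part of the data cleaning phase could look like, here is a minimal sketch in Python. It is not the project's actual pipeline: the function names (`clean_line`, `clean_corpus`), the `min_tokens` threshold, and the sample strings are all assumptions made for this example. It normalizes Unicode so that tone-marked letters typed in different ways compare equal, strips URLs, and drops very short or duplicate lines.

```python
import re
import unicodedata

# Hypothetical patterns for this sketch; a real crawler would need more rules.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
WS_RE = re.compile(r"\s+")


def clean_line(line: str) -> str:
    """Normalize one crawled line of text."""
    # NFC makes "e" + combining grave accent equal to the precomposed "è",
    # which matters for languages written with tone marks.
    text = unicodedata.normalize("NFC", line)
    text = URL_RE.sub(" ", text)          # remove links left over from web pages
    return WS_RE.sub(" ", text).strip()   # collapse runs of whitespace


def clean_corpus(lines, min_tokens=3):
    """Return cleaned lines, skipping very short lines and exact repeats."""
    seen = set()
    cleaned = []
    for line in lines:
        text = clean_line(line)
        if len(text.split()) < min_tokens:
            continue                      # too short to be a useful sentence
        key = text.casefold()             # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Placeholder input: the same sentence in precomposed and decomposed form,
# plus a fragment that is too short to keep.
raw = [
    "\u00e8d\u00e8 Yor\u00f9b\u00e1 d\u00e1ra  https://example.com/page",
    "e\u0300de\u0300 Yoru\u0300ba\u0301 da\u0301ra",
    "ok",
]
corpus = clean_corpus(raw)
```

In this sketch the two differently encoded copies of the sentence collapse into one entry, so `corpus` holds a single line. Manual review, as the phase description notes, would still be needed for anything the automatic rules miss.
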
R3 will involve active collaboration with the UBC Language Sciences Initiative, CAIDA, African Studies, and the Liu Institute Network for Africa, as well as the African Languages Technology Initiative (ALTi), Squiggle Park and Facebook Research. ALTi will provide technical support on data collection and annotation, while Facebook Research will be a context to mobilize the work to the larger society. The outcomes of this work are: (1) publicly accessible data; (2) publicly accessible technology for African languages; (3) new knowledge related to developing deep-learning-based NLP models for low-resource languages; and (4) public engagement through social media, blog posts and workshops aimed at influencing language policy to aid inclusion. Although there has been growing interest in African NLP in recent years, there are few or no tokenizers or part-of-speech taggers for the languages selected, and only a few language models, machine translation systems, and named entity recognition models exist. My academic training in linguistics and computer science, as well as my African heritage, strategically positions me to proffer solutions that are not only computationally efficient but also sensitive to the languages and cultures of native and Indigenous communities.

What does being a Public Scholar mean to you?

A public scholar is one whose work has the good of people as its ultimate goal. If society would not benefit from a research activity, then it's no good.

In what ways do you think the PhD experience can be re-imagined with the Public Scholars Initiative?

The Public Scholars Initiative is an important one. I believe it will help researchers think deeply about the importance of their work within society and ensure that their research improves society.

How do you envision connecting your PhD work with broader career possibilities?

The corpus and tools created will be accessible to the public under a Creative Commons license, which is very valuable for low-resource language research. The tools and resources developed will also directly impact six African communities: they will enable approximately 242 million people to read messages automatically translated into their language from a different language and to access education, health, and recreational services, and they will enable many others to create tools that recognize African languages and make less biased decisions about African people. As an African, I expect that ongoing collaborations, and opportunities for more collaborations, will strengthen valuable relationships with various stakeholders and build skills that will be useful even after graduation in my intended career of developing language technology for minority languages.

How does your research engage with the larger community and social partners?

Language learners, researchers and native speakers will benefit from workshops where I will share the output of this work. For instance, there are ongoing plans with UBC Language Sciences to spearhead the planning and organization of a mini-conference related to my research. Active engagements with different organizations interested in African NLP will also provide a space for various stakeholders to interact with the people who would use the technologies that this work will enable. These engagements will accelerate research on the selected languages and on other Indigenous languages (including Indigenous languages in Canada and the rest of the world). Since 2018, I have presented different parts of my work to the UBC community and at different external venues. Feedback from these presentations has been encouraging, and I am committed to further public engagement.

Why did you decide to pursue a graduate degree?

During my undergraduate degree in Linguistics, I developed a dictionary and performed some morphological analysis on Ojirami - a minority language spoken in the Akoko Edo area of Nigeria. I used some computing tools for my research and really enjoyed the experience. I especially enjoyed the opportunity to prescribe the language's first orthography and develop its first dictionary. It was a beautiful experience presenting this work to the Ojirami community and the National Institute for Nigerian Languages. From that moment I became very interested in computational linguistics, particularly for low-resource languages, and I decided to pursue a graduate degree to develop the requisite skills.

Why did you choose to come to British Columbia and study at UBC?

UBC was the only school I found that readily provided the type of interdisciplinary support that I needed. Most computational linguistics programs focus on either computer science or linguistics, and I wanted both. I have been able to develop both linguistic and computing skills in this program so far. I love the diversity, the flexibility, the program structure, and the level of support available to help students succeed. I also love the faculty members and their genuine interest in students' success.